Continuous Deployment for Downloadable Client Software

Continuous Deployment is the practice of shipping your code as frequently as possible. It is relatively straightforward when applied to a production deploy, as is common for websites and services. When applied to traditional client-side applications, there are three big problems to solve: the software update user experience, the collection and interpretation of quality metrics, and surviving the chaos of the desktop environment.

The first problem with Continuous Deployment for downloadable client software? It’s a download! Classically, the upgrade process is: The user decides to update, finds the software’s website again, downloads the newer version and runs the installer. This requires the user to remember that the software can be upgraded, find a need for it to be upgraded and determine it’s worth the effort and risk of breaking their install. When OmniFocus was in beta the developers were releasing constantly, many times per day! While the upgrade was manual and you had to remember to do it, the whole process worked well because the selected users were software-hip and often software developers themselves. I have nothing but praise for the way The Omni Group rapidly developed and deployed; plus they published a bunch of statistics! Still, there are clearly better ways to handle that stream of upgrades.

Software Update Experience

For successful Continuous Deployment, you need as many users as possible on your most recent deploy. There are a few models for increasing upgrade adoption, and I’ll list them from least to most effective.

Check for updates on application startup

When you run the software, it reaches out to your download servers and checks for a new version. If one is available, it shows an upgrade prompt. These dialogs are most useful when they can sell the user on the upgrade, so that it feels like a natural process: “I’m upgrading because I want to use new feature Y”. These prompts can become extremely annoying, depending on where in the user’s story your application starts.

This is the process IMVU uses today, with all of its pros and cons. The best case user story is: The user remembers she wants to hang out on IMVU and launches the client. She notices we’ve added a cool new feature and decides to upgrade. The process is fast and relatively painless.

The worst case user story is: The user is browsing our website and clicks a link to hang out in a room full of people he finds interesting. On his way to the room, he gets a dialog box with a bunch of text on it. He doesn’t bother reading because it doesn’t look related at all to what he’s trying to do. He clicks yes because that appears to be the most obvious way to continue into his room. He’s now forced to wait through a painfully slow download, a painfully slow install process and far too many dialogs with questions he doesn’t care about. By the time he makes it into the room no one is there. The update process has completely failed him. Let’s just say there is definitely room for improvement.
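
As a rough sketch of the startup check (not IMVU’s actual code), the logic boils down to comparing the installed version against a manifest fetched from the download servers. The manifest keys (`latest_version`, `headline`) and the `launched_with_destination` flag are assumptions made up for this example; the flag is one possible way to avoid the worst case story above by holding the prompt when the user is already on their way somewhere.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '4.12.0' into (4, 12, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


@dataclass(frozen=True)
class UpdateOffer:
    version: str
    headline: str  # the one feature worth selling in the prompt


def check_for_update(installed: str, manifest: dict) -> Optional[UpdateOffer]:
    """Return an offer if the (hypothetical) manifest advertises a newer build."""
    latest = manifest["latest_version"]
    if parse_version(latest) <= parse_version(installed):
        return None
    return UpdateOffer(version=latest, headline=manifest.get("headline", ""))


def should_prompt_now(offer: Optional[UpdateOffer],
                      launched_with_destination: bool) -> bool:
    """Hold the prompt if the user launched the client to go somewhere specific."""
    return offer is not None and not launched_with_destination
```

Comparing parsed tuples rather than raw strings matters: as strings, "1.10.0" sorts before "1.9.0".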

Bundle an Update Manager

This is the approach taken by Microsoft, Apple and Adobe, to name a few. Upgrades are automatically downloaded in the background by an always-running background process, and then they’re optionally installed at the user’s pace. While this could theoretically be a painless process, the three vendors I’ve named have all decided it’s important to prompt you until you install the upgrades. This nagging becomes so frustrating that it drives users away from the products themselves (personally, I use Foxit Reader just to avoid the Adobe download manager).

Download in the background, upgrade on the next run

This is the Firefox approach: downloads happen in the background while you run the application. When they’re finished you’re casually prompted, once and only once, if you’d like to restart the app now to apply the upgrade. If you don’t, the next time you run Firefox you’re forced through the prompt-less update process. This is a huge improvement over constant nags and installers filled with useless prompts. Updating Firefox isn’t something I think about anymore; it just happens. I would call this the gold standard of current update practices. We know it works, and it works really well.
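
The “download now, apply on next run” flow can be sketched in two halves: a background step that stages the payload, and a startup step that applies it. This is a minimal illustration under my own assumptions, not how Firefox implements it: the file names (`payload.bin`, `ready.json`), the single-file install and the idea that the expected SHA-256 comes from the update server are all hypothetical.

```python
import hashlib
import json
import os
from pathlib import Path
from typing import Optional


def stage_update(staging_dir: Path, payload: bytes, version: str,
                 expected_sha256: str) -> None:
    """Background step: store the downloaded payload for the next launch."""
    staging_dir.mkdir(parents=True, exist_ok=True)
    (staging_dir / "payload.bin").write_bytes(payload)
    meta = {"version": version, "sha256": expected_sha256}
    # Write the marker last, via a rename, so a half-finished download
    # is never mistaken for a complete one.
    tmp = staging_dir / "ready.json.tmp"
    tmp.write_text(json.dumps(meta))
    os.replace(tmp, staging_dir / "ready.json")


def apply_staged_update(staging_dir: Path, install_path: Path) -> Optional[str]:
    """Startup step: swap in a verified staged build. Returns its version or None."""
    marker = staging_dir / "ready.json"
    if not marker.exists():
        return None
    meta = json.loads(marker.read_text())
    payload_path = staging_dir / "payload.bin"
    actual = hashlib.sha256(payload_path.read_bytes()).hexdigest()
    if actual != meta["sha256"]:
        marker.unlink()  # corrupt download: discard it and keep the old build
        return None
    os.replace(payload_path, install_path)  # no prompts, no installer dialogs
    marker.unlink()
    return meta["version"]
```

The client would call `apply_staged_update` before loading anything else, so the user only ever sees the new version start.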

Download in the background, upgrade invisibly

This is the Google Chrome model. When updates are available they’re automatically downloaded in the background. As far as I can tell, they’re applied invisibly as soon as the browser is restarted. I’ve never seen an update progress bar and I’ve never been asked if I wanted to upgrade. Their process is so seamless that I have to research and guess at most of the details. This has huge benefits for Continuous Deployment, as you’ll have large numbers of users on new versions very quickly. Unfortunately this also means users are surprised when UI elements change, and are often frustrated.

Download in the background, upgrade the running process

Can you do better than Google Chrome? I think you can. Imagine if your client downloaded and installed updates automatically in the background, and then spawned a second client process. This process would have its state synced up with the running process and then at some point it would be swapped in. This swap would transfer over operating system resources (files and sockets, maybe even windows and other resources depending on operating system). Under this system you could realistically expect most of your users to be running your most recent version within minutes of releasing it, meeting or exceeding the deploy-feedback cycle of our website deploy process.

I’m guessing Chrome is actually close to this model. A lot of the state is currently stored in a SQLite database, making the sync-up part relatively easy. The top level window and other core resources are owned by a small pseudo-kernel. You could easily imagine a scenario where deploys of non-pseudo-kernel changes could instantly update while pseudo-kernel changes would happen on next update. For all I know Chrome is doing that today! This doesn’t address, and in fact exacerbates, the friction caused by changing UI and functionality.

Success Metrics

Unlike a production environment, you don’t control any of the environmental variables. You’ll face broken hardware, out of memory conditions, foreign language operating systems, random DLLs, other processes inserting their code into yours, drivers fighting for first-to-act in the event of crashes and other progressively more esoteric and unpredictable integration issues. Anyone who writes widely-run client software quickly models the user’s computer as an aggressively hostile environment. The examples I gave are all issues IMVU has had to understand and solve.

As with all hard problems, the first step is to create the proper feedback loop: you need to create a crash reporting framework. While IMVU rolled its own, Google has since open sourced theirs. Note that users are asked before crash reports are submitted, and we allow a user to view their own report. The goal is to get a high signal to noise chunk of information from the client’s crashed state. I’ve posted a sample crash report, though it was synthetically created by a crash-test. I hope no one notices my desktop is a 1.86 GHz processor… Of note, we collect stacks that unwind through both C++ and Python through some reporting magic that Chad Austin, one of my prolific coworkers, wrote and is detailing in a series of posts. In addition to crash reporting, you’ll need extensive crash metrics and preferably user behaviour metrics. Every release should be A/B tested against the previous release, allowing you to prevent unknown regressions in business metrics. These metrics are a game changer, but those details will have to wait for another post.
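
To make the feedback loop concrete, here is a minimal sketch of the Python half of a crash reporter. It is not IMVU’s framework: the report fields, the `ask_user` and `submit` callbacks and the grouping signature are assumptions chosen for illustration, and it only sees uncaught Python exceptions. Native C++ crashes need a native handler.

```python
import platform
import sys
import traceback


def build_crash_report(exc_type, exc_value, exc_tb, client_version: str) -> dict:
    """Collect a small, high-signal description of an uncaught exception."""
    frames = traceback.extract_tb(exc_tb)
    return {
        "client_version": client_version,
        "exception": exc_type.__name__,
        "message": str(exc_value),
        "stack": [f"{f.filename}:{f.lineno} in {f.name}" for f in frames],
        "os": platform.platform(),
        "python": platform.python_version(),
    }


def crash_signature(report: dict) -> str:
    """Group reports by exception type and innermost frame for aggregate counts."""
    top = report["stack"][-1] if report["stack"] else "<no stack>"
    return f'{report["exception"]} @ {top}'


def install_crash_handler(client_version: str, ask_user, submit) -> None:
    """Route uncaught exceptions through the reporter, with user consent."""
    def hook(exc_type, exc_value, exc_tb):
        report = build_crash_report(exc_type, exc_value, exc_tb, client_version)
        if ask_user(report):  # the user may view the report, then accept or decline
            submit(report)
        sys.__excepthook__(exc_type, exc_value, exc_tb)
    sys.excepthook = hook
```

Counting reports per `crash_signature` per release is one simple way to build the kind of aggregate view that makes regressions stand out.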

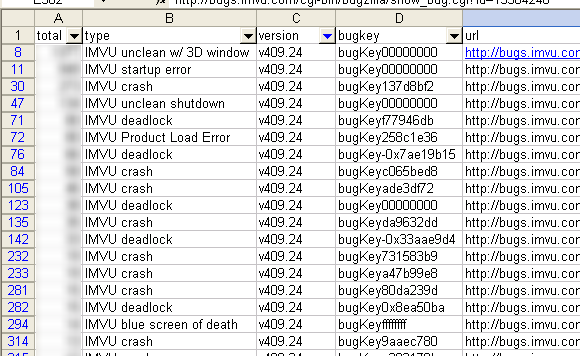

A screenshot of our aggregate bug report data

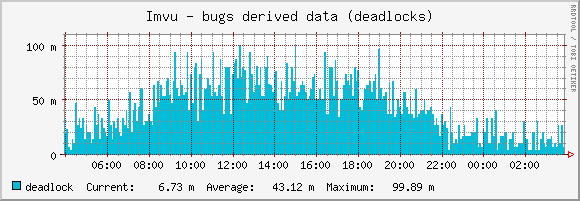

If your application requires a network connection you’ve been gifted the two best possible metrics: logins and pings. Login metrics let you notice crashes on startup or regressions in the adoption path. These are more common than you think, since they can be caused or exacerbated by 3rd party software or Windows updates. Ping metrics let you measure session length and look for when a client stopped pinging without giving a reason to the server. These metrics will tell you when your crash reporting is broken, or when you’ve regressed in a way that breaks the utility of the application without breaking the application itself. A common example of this is a deadlock or, more generically, a stall: the application hasn’t crashed but for some reason isn’t progressing. Once you’ve found a regression case like that you can implement logic to look for the failure condition and alert on it, to fail fast in the event of future regressions. For deadlocks we wrote a watcher thread that polls the stack of the main thread. If it hasn’t changed for a few seconds, we report back with the state of all of the current threads. In aggregate that means graphs that trend closely with our users’ frustration.

Deadlocks or stalls, measured in millistalls (thanks nonsensical Cacti defaults)
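
The watcher thread described above can be approximated in a few lines of Python. This is a sketch, not our production code: the function names, the `report` callback and the timing defaults are assumptions, and `sys._current_frames()` is a CPython-specific call.

```python
import sys
import threading
import time
import traceback


def snapshot_stack(thread_id: int):
    """Return the thread's current stack as a comparable tuple, or None if gone."""
    frame = sys._current_frames().get(thread_id)
    if frame is None:
        return None
    return tuple((f.filename, f.lineno, f.name)
                 for f in traceback.extract_stack(frame))


def dump_all_threads() -> dict:
    """Map each live thread id to its formatted stack."""
    return {tid: "".join(traceback.format_stack(frame))
            for tid, frame in sys._current_frames().items()}


def watch_for_stalls(thread_id, report, poll_interval=1.0, stall_after=5.0,
                     stop=None):
    """Poll one thread's stack; report every thread if it stops changing."""
    stop = stop or threading.Event()
    last_stack, unchanged_since, reported = None, time.monotonic(), False
    while not stop.wait(poll_interval):
        stack = snapshot_stack(thread_id)
        if stack is None:
            return  # the watched thread has exited
        now = time.monotonic()
        if stack != last_stack:
            last_stack, unchanged_since, reported = stack, now, False
        elif not reported and now - unchanged_since >= stall_after:
            report(dump_all_threads())  # report once per stall
            reported = True
```

One caveat with this naive version: a thread legitimately idling in a blocking wait looks identical to a deadlock, so a real client would likely pair it with a heartbeat from the main loop.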

Once you have great metrics, you have to strike a balance between asking customers to endure an update and gaining the feedback from your crash reporting and business metrics. For IMVU’s website deployment process we had a two-phase rollout. Similarly, for client development we have a “release track” and a “pre-release track”, where releases are version X.0 and pre-releases are subsequent dot releases. We ship a pre-release per day, and a full release every two weeks. Existing users are free to opt in to and out of the pre-release track. Newly registered users are sometimes given a pre-release as part of an A/B experiment against the prior full release, but are then offered the next full release and do not stay in the pre-release track. Google Chrome is another example of this model. By default you’re given the stable channel, which is a quarterly update in addition to security updates. You can opt in to the beta channel for monthly updates or the dev channel for weekly updates.
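
The track rules above are easy to get subtly wrong, so here is a small sketch of how the selection could be expressed. The function names and the `(major, minor)` tuple encoding are assumptions for illustration; the only rules taken from our process are that X.0 is a full release, that opted-in users follow pre-releases, and that experiment users fall back to the next full release.

```python
from typing import Tuple

Version = Tuple[int, int]  # (major, minor); minor == 0 means a full release


def is_full_release(version: Version) -> bool:
    return version[1] == 0


def initial_build(in_experiment: bool, latest_release: Version,
                  latest_prerelease: Version) -> Version:
    """Build handed to a newly registered user."""
    return latest_prerelease if in_experiment else latest_release


def build_to_offer(opted_in: bool, installed: Version, latest_release: Version,
                   latest_prerelease: Version) -> Version:
    """Build an existing install should move to (or stay on)."""
    if opted_in:
        return max(installed, latest_release, latest_prerelease)
    # Everyone else, including experiment users sitting on a pre-release,
    # only ever moves forward to a full release.
    return latest_release if latest_release > installed else installed
```

For example, an experiment user who started on pre-release 12.3 stays there while 12.4 and 12.5 ship, and is then offered 13.0.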

The harsh reality of the desktop environment

Once you’re measuring your success rates in the wild and deploying regularly, you’ll get the real picture of how harsh the desktop landscape is. Continuous Deployment changes your mindset around these harsh realities: code has to survive in the wild, but you also must engineer automated testing and production measurement to ensure that changes won’t regress when run in a hostile environment.

Hostile Hardware

To start, software you write and deploy will have to survive on effectively hostile hardware and drivers. For a 3D application, that most commonly means crashes on startup, crashes when a specific 3D setting is used or jarring visual glitches. Drivers and other software on Windows have a far-too-common practice of dynamically linking their own code into your process. Apart from being rude, this can lead to crashes in your process in code you didn’t write or call and can’t reproduce without the same set of hardware and drivers. Needless to say, crash reports contain an enumeration of hardware and drivers.

Running in an unknown environment means dealing with the long-tail of odd configurations: systems with completely hosed registries, corporate firewalls that allow only HTTP and only port 80, antivirus software being nearly malicious, virus software being overtly malicious and motherboards that degrade when they heat up just to name a few. These problems scale up with your user-base, and if you choose to ignore “incorrectly” configured computers you’ll end up ignoring a surprisingly large percentage of your would-have-been customers.

Go to the source

Dealing with these issues is compounded by the fact that you have minimal knowledge about the computers that are actually running your software. Sometimes the best metrics in the world aren’t enough. For IMVU that meant we were forced to go as far as buying one of our users’ laptops. She was a power user who heavily used our software and ran into its limitations regularly. The combination of her laptop and her account would run into bugs we couldn’t reproduce on the hardware we had in house. We purchased her laptop instead of just buying the same hardware configuration because she was gracious enough to not wipe the machine; we were testing with all of the software she commonly ran in parallel. This level of testing takes a lot of customer trust, and we’re truly indebted to her for allowing us the privilege of that kind of access.

We also looked at our client hardware metrics and the Unity hardware survey, and cobbled together our 15th percentile computer. This is a prototypical machine which is better than roughly 1/8th of our users’ hardware: 384 MB of RAM, a 2 GHz Pentium 4 and no hardware graphics acceleration. These machines commonly reproduced issues that our business class Dell boxes never would. Many of our users have Intel graphics “hardware”, which is so inefficient at 3D that it’s a better experience to render our graphics in pure software. Ideally we’d run automated tests on these machines as part of our deploy process, but we’re not there yet. Our current test infrastructure assumes that you can compile our source code in reasonable time on the testing machine.
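
If you collect hardware metrics, picking the target machine is a small calculation. The sketch below uses a nearest-rank percentile applied independently to each metric; the field names (`ram_mb`, `cpu_mhz`) and sample data are made up for the example, and the result is a composite that may not match any single real machine.

```python
from typing import Dict, List


def percentile(values: List[int], pct: int) -> int:
    """Nearest-rank percentile: the smallest value with pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceil(pct * n / 100)
    return ordered[rank - 1]


def target_machine(samples: List[Dict[str, int]], pct: int = 15) -> Dict[str, int]:
    """Build a prototypical low-end spec from per-client hardware samples."""
    keys = samples[0].keys()
    return {key: percentile([s[key] for s in samples], pct) for key in keys}


# Hypothetical survey of 20 clients.
survey = [{"ram_mb": 256 * i, "cpu_mhz": 1000 + 100 * i} for i in range(1, 21)]
spec = target_machine(survey)
```

Numeric specs are only part of the picture: categorical facts such as “no hardware graphics acceleration” have to come from counting how common each configuration is.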

Before I end this post I’d like to add a few words of caution. If you’re deploying client software constantly then you’re relying on a small set of code to be nearly perfect: your roll back loop. In a worst case scenario, a client installer ships that somehow breaks the user’s ability to downgrade the client. In the absolute worst case, that means breaking the machine completely; let’s hope you won’t have to create a step by step tutorial on how to recreate your boot.ini. Every IMVU client release is smoke tested by a human before being released.
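
One hedged way to picture a roll back loop is a tiny launcher that counts failed starts and falls back to the previous build. This is purely illustrative and not how the IMVU client works: the state keys, the threshold and the idea of a separate launcher persisting this state are all assumptions.

```python
def begin_launch(state: dict, max_failed_starts: int = 2) -> dict:
    """Decide which build to run, counting this launch as failed until proven healthy."""
    state = dict(state)
    if state["failed_starts"] >= max_failed_starts and state.get("previous"):
        # The current build keeps dying on startup: go back to the last good one.
        state["current"], state["previous"] = state["previous"], None
        state["failed_starts"] = 0
    state["failed_starts"] += 1
    return state


def mark_healthy(state: dict) -> dict:
    """Called once the client has started successfully (e.g. after login)."""
    return {**state, "failed_starts": 0}
```

Because everything else depends on it, this is the code that should change least often and be tested most heavily.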

It’s a much rougher environment for Continuous Deployment on client software. There are non-obvious deploy semantics, rough metrics tracking, and a hostile environment all standing in the way of shipping faster. Despite the challenges, Continuous Deployment for client software is possible, and it has the same return as Continuous Deployment elsewhere: better feedback, faster iteration times and the ability to make a product better, faster.